One problem I see crop up regularly in Terraform codebases is repeated code, usually there to support deploying multiple environments or multiple instances of the same stack. Take some networking and a virtual machine hosting a web application: the code is copied and pasted into different folders or repositories, and some (usually minor) configuration is changed. With three environments you now have three copies of the codebase to maintain and keep in sync, which puts additional and unnecessary strain on your engineers. So, how would I fix this?

The answer might seem easy, but in my experience it ends up being a little more complicated. To me it all starts with removing the repeated and often near-identical code. Realistically, your non-production environments should be identical to your production environment(s), perhaps just at a reduced scale. All of this can be solved by ingesting configuration into our Terraform codebase/module. You wouldn't keep three copies of your application's code, now would you?

In my mind there are three ways that this can be achieved:

- Terraform variable files

- Higher-order modules

- YAML/JSON configuration files

Let's go through each of these approaches in more depth to find out how they solve the problem of repeated code. But first, let's write the code that would be used in the copy+paste scenario, so that we can improve on it for each of the ingestion approaches.

First we set up our provider.

provider.tf

provider "azurerm" {
  features {}
}

Next we set up all of our networking infrastructure. As you can see, every value here is statically assigned.

network.tf

resource "azurerm_resource_group" "this" {
  name     = format("rg-%s", local.name_suffix)
  location = "australiaeast"
}

resource "azurerm_virtual_network" "this" {
  name                = format("vn-%s", local.name_suffix)
  location            = azurerm_resource_group.this.location
  resource_group_name = azurerm_resource_group.this.name
  address_space       = ["10.0.0.0/23"]
}

resource "azurerm_subnet" "this" {
  name                 = format("sn-%s", local.name_suffix)
  resource_group_name  = azurerm_resource_group.this.name
  virtual_network_name = azurerm_virtual_network.this.name
  address_prefixes     = ["10.0.1.0/24"]
}

resource "azurerm_public_ip" "this" {
  name                = format("pip-%s", local.name_suffix)
  location            = azurerm_resource_group.this.location
  resource_group_name = azurerm_resource_group.this.name
  allocation_method   = "Dynamic"
}

resource "azurerm_network_security_group" "this" {
  name                = format("nsg-%s", local.name_suffix)
  location            = azurerm_resource_group.this.location
  resource_group_name = azurerm_resource_group.this.name
}

resource "azurerm_subnet_network_security_group_association" "this" {
  subnet_id                 = azurerm_subnet.this.id
  network_security_group_id = azurerm_network_security_group.this.id
}

resource "azurerm_network_interface" "this" {
  name                = format("nic-%s", local.name_suffix)
  location            = azurerm_resource_group.this.location
  resource_group_name = azurerm_resource_group.this.name

  ip_configuration {
    name                          = "config"
    subnet_id                     = azurerm_subnet.this.id
    private_ip_address_allocation = "Dynamic"
    public_ip_address_id          = azurerm_public_ip.this.id
  }
}

Next we create our naming suffix as a local value, along with the SSH key and the virtual machine.

main.tf

locals {
  # Statically assigned suffix: region, environment and project.
  name_suffix = "aue-dev-blt"
}

resource "tls_private_key" "this" {
  algorithm = "RSA"
  rsa_bits  = 4096
}

resource "azurerm_linux_virtual_machine" "this" {
  # Hyphens are stripped from the machine name.
  name                = replace(format("vm-%s", local.name_suffix), "-", "")
  location            = azurerm_resource_group.this.location
  resource_group_name = azurerm_resource_group.this.name
  size                = "Standard_F2"
  admin_username      = "adminuser"

  network_interface_ids = [
    azurerm_network_interface.this.id
  ]

  admin_ssh_key {
    username   = "adminuser"
    public_key = tls_private_key.this.public_key_openssh
  }

  os_disk {
    caching              = "ReadWrite"
    storage_account_type = "Standard_LRS"
  }

  source_image_reference {
    publisher = "Canonical"
    offer     = "UbuntuServer"
    sku       = "16.04-LTS"
    version   = "latest"
  }
}

Finally we will output our private key so that we can access the server!

outputs.tf

output "ssh_private_key" {
  value     = tls_private_key.this.private_key_openssh
  sensitive = true
}

Warning! Do not do this in any real-world scenario. The key should be stored in a secrets vault of some description!
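One way to act on that warning is to write the key into a vault rather than exposing it as an output. The sketch below assumes an existing Azure Key Vault whose ID is supplied through a hypothetical key_vault_id variable (not part of the original code), and it reuses tls_private_key.this and local.name_suffix from main.tf above. Keep in mind that the private key is still recorded in the Terraform state, so the state itself needs to be protected too.

```hcl
# Hypothetical input: the resource ID of an existing Azure Key Vault.
variable "key_vault_id" {
  type        = string
  description = "(Required) ID of the Key Vault that will hold the SSH private key."
}

# Store the generated private key as a secret instead of a root output.
# tls_private_key.this and local.name_suffix come from main.tf above.
resource "azurerm_key_vault_secret" "ssh_private_key" {
  name         = format("ssh-%s", local.name_suffix)
  value        = tls_private_key.this.private_key_openssh
  key_vault_id = var.key_vault_id
}
```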

Variable Files

To use tfvars files we first need input variables, so the first change is to remove all of that static assignment and use variables instead!

variables.tf

variable "location" {
  type        = string
  description = <<-EOT
    (Required) The Azure region where the resources will be deployed.
  EOT

  validation {
    condition = contains(
      ["australiaeast", "australiasoutheast"],
      var.location
    )
    error_message = "Err: invalid Azure location provided."
  }
}

variable "environment" {
  type        = string
  description = <<-EOT
    (Required) The environment short name for the resources.
  EOT

  validation {
    condition = contains(
      ["dev", "uat", "prd"],
      var.environment
    )
    error_message = "Err: invalid environment provided."
  }
}

variable "project_identifier" {
  type        = string
  description = <<-EOT
    (Required) The identifier for the project, 4 character maximum
  EOT

  validation {
    condition     = length(var.project_identifier) <= 4
    error_message = "Err: project identifier cannot be longer than 4 characters."
  }
}

variable "network" {
  type = object({
    address_space = string
    subnets = list(
      object({
        role          = string
        address_space = string
      })
    )
  })
  description = <<-EOT
    (Required) Network details
  EOT
}

Above we have set up variables for our location, environment, project_identifier and network. In a more real-world scenario we would likely pass in a lot of the virtual machine configuration as well, but to keep the code simple we will do the bare minimum. With all those inputs in place, we now have to update the codebase to use them!
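As a rough idea of what passing in the virtual machine configuration could look like, here is a minimal sketch. The virtual_machine variable and its attribute names are my own assumptions for illustration; the defaults simply mirror the values that are hard-coded in main.tf.

```hcl
# Hypothetical input for the virtual machine settings that are otherwise
# hard-coded in main.tf. optional() with a default requires Terraform 1.3+.
variable "virtual_machine" {
  type = object({
    size           = optional(string, "Standard_F2")
    admin_username = optional(string, "adminuser")
  })
  default     = {}
  description = "(Optional) Virtual machine settings; omitted attributes fall back to the defaults."
}
```

The virtual machine resource would then read var.virtual_machine.size and var.virtual_machine.admin_username instead of the literal strings, and an environment only needs to mention the attributes it wants to override.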

main.tf

locals {
  # Short names used in resource names, keyed by Azure region.
  location_map = {
    "australiaeast"      = "aue"
    "australiasoutheast" = "aus"
  }

  name_suffix = lower(format(
    "%s-%s-%s",
    local.location_map[var.location],
    var.environment,
    var.project_identifier
  ))
}

resource "azurerm_resource_group" "this" {
  name     = format("rg-%s", local.name_suffix)
  location = var.location
}

resource "tls_private_key" "this" {
  algorithm = "RSA"
  rsa_bits  = 4096
}

resource "azurerm_linux_virtual_machine" "this" {
  name                = replace(format("vm-%s", local.name_suffix), "-", "")
  location            = azurerm_resource_group.this.location
  resource_group_name = azurerm_resource_group.this.name
  size                = "Standard_F2"
  admin_username      = "adminuser"

  network_interface_ids = [
    azurerm_network_interface.this.id
  ]

  admin_ssh_key {
    username   = "adminuser"
    public_key = tls_private_key.this.public_key_openssh
  }

  os_disk {
    caching              = "ReadWrite"
    storage_account_type = "Standard_LRS"
  }

  source_image_reference {
    publisher = "Canonical"
    offer     = "UbuntuServer"
    sku       = "16.04-LTS"
    version   = "latest"
  }
}

As you can see above, our locals block is a little more interesting now. We use the location input variable to select the short name for the location, which we then use when naming our resources. The suffix is now built from our input variables, which means it's extremely dynamic; changing our environment, for instance, becomes a breeze!

network.tf

resource "azurerm_virtual_network" "this" {
  name                = format("vn-%s", local.name_suffix)
  location            = azurerm_resource_group.this.location
  resource_group_name = azurerm_resource_group.this.name
  address_space       = [var.network.address_space]
}

resource "azurerm_subnet" "this" {
  for_each = {
    for v in var.network.subnets :
    v.role => v.address_space
  }

  name                 = format("sn-%s-%s", local.name_suffix, each.key)
  resource_group_name  = azurerm_resource_group.this.name
  virtual_network_name = azurerm_virtual_network.this.name
  address_prefixes     = [each.value]
}

resource "azurerm_public_ip" "this" {
  name                = format("pip-%s", local.name_suffix)
  location            = azurerm_resource_group.this.location
  resource_group_name = azurerm_resource_group.this.name
  allocation_method   = "Dynamic"
}

resource "azurerm_network_security_group" "this" {
  name                = format("nsg-%s", local.name_suffix)
  location            = azurerm_resource_group.this.location
  resource_group_name = azurerm_resource_group.this.name
}

resource "azurerm_subnet_network_security_group_association" "this" {
  for_each = azurerm_subnet.this

  subnet_id                 = each.value.id
  network_security_group_id = azurerm_network_security_group.this.id
}

resource "azurerm_network_interface" "this" {
  name                = format("nic-%s", local.name_suffix)
  location            = azurerm_resource_group.this.location
  resource_group_name = azurerm_resource_group.this.name

  ip_configuration {
    name                          = "config"
    subnet_id                     = azurerm_subnet.this["iaas"].id
    private_ip_address_allocation = "Dynamic"
    public_ip_address_id          = azurerm_public_ip.this.id
  }
}

Our network configuration is now fed by the network input variable, which allows us to create multiple subnets if we so desire!

Now that this is all in place, how do we consume this codebase? Through tfvars files: you would have one tfvars file for every environment or project that you want to build. An example of this is below:

dev.tfvars

location           = "australiaeast"
environment        = "dev"
project_identifier = "blt"

network = {
  address_space = "10.0.0.0/23"
  subnets = [
    {
      role          = "iaas"
      address_space = "10.0.1.0/24"
    }
  ]
}

prd.tfvars

location           = "australiaeast"
environment        = "prd"
project_identifier = "blt"

network = {
  address_space = "10.10.0.0/23"
  subnets = [
    {
      role          = "iaas"
      address_space = "10.10.1.0/24"
    }
  ]
}

As you can see, we have two environments declared above. They completely change the configuration of the Terraform code without touching the code itself... no more copy and paste! Your CI/CD pipelines would select the correct tfvars file and pass it into the Terraform run. A change to the codebase now flows through your environments more easily, and when you test or develop in the lower environments you can be far more confident that what works in development will work in production just as well! This gives not only your engineers but also the business more confidence in the codebase.
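To make the selection step concrete, here is a sketch of a third, hypothetical uat.tfvars with two subnets, plus the commands a pipeline might run against it. The address ranges and the "data" role are made up for illustration.

```hcl
# uat.tfvars (hypothetical third environment; address ranges are made up)
location           = "australiaeast"
environment        = "uat"
project_identifier = "blt"

network = {
  address_space = "10.5.0.0/23"
  subnets = [
    {
      role          = "iaas" # network.tf attaches the NIC to the "iaas" subnet
      address_space = "10.5.0.0/24"
    },
    {
      role          = "data"
      address_space = "10.5.1.0/24"
    }
  ]
}

# Select the file at plan/apply time, for example:
#   terraform plan  -var-file="uat.tfvars"
#   terraform apply -var-file="uat.tfvars"
```

Each environment also needs its own state (for example a separate workspace or backend key); otherwise switching tfvars files would modify the same resources rather than create a new environment.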

Higher-order Modules

Now that we have defined input variables for our code, we could go one step further and turn that code into a module. Doing this allows us to strictly control the version of our code that each environment consumes. In fact, we could use either variable files or configuration files to drive the module! For the sake of this example we will statically assign the inputs.

First things first: we will create the module itself, and we will do this locally.

$ mkdir -p $(pwd)/modules/app-server

And now the relevant files for us to store our code:

$ touch $(pwd)/modules/app-server/{main,network,inputs,outputs}.tf

One thing you might notice straight away is the lack of a provider.tf file. We do not need one inside the module at all: when we call the module we configure the provider at that level, and the module inherits that configuration.
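Although the module does not configure a provider, it can still declare which providers it depends on. A minimal sketch follows; the versions.tf file name and the version constraints are my assumptions, so adjust them to whatever you have actually tested against.

```hcl
# modules/app-server/versions.tf (hypothetical file name)
# Declares the providers the module needs without configuring them;
# the configuration itself still comes from the calling root module.
terraform {
  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = ">= 3.0" # illustrative constraint
    }
    tls = {
      source  = "hashicorp/tls"
      version = ">= 4.0" # illustrative constraint
    }
  }
}
```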

For the sake of not making this post 10,000 lines I won't put the code in for the module itself as it would be identical to the code in the Variable Files section, just put into the empty files we created just before. I will instead show how we call the module.

main.tf

provider "azurerm" {
  features {}
}

module "dev_app_server" {
  source = "./modules/app-server"

  location           = "australiaeast"
  environment        = "dev"
  project_identifier = "blt"

  network = {
    address_space = "10.0.0.0/23"
    subnets = [
      {
        role          = "iaas"
        address_space = "10.0.1.0/24"
      }
    ]
  }
}

output "ssh_private_key" {
  value     = module.dev_app_server.ssh_private_key
  sensitive = true
}

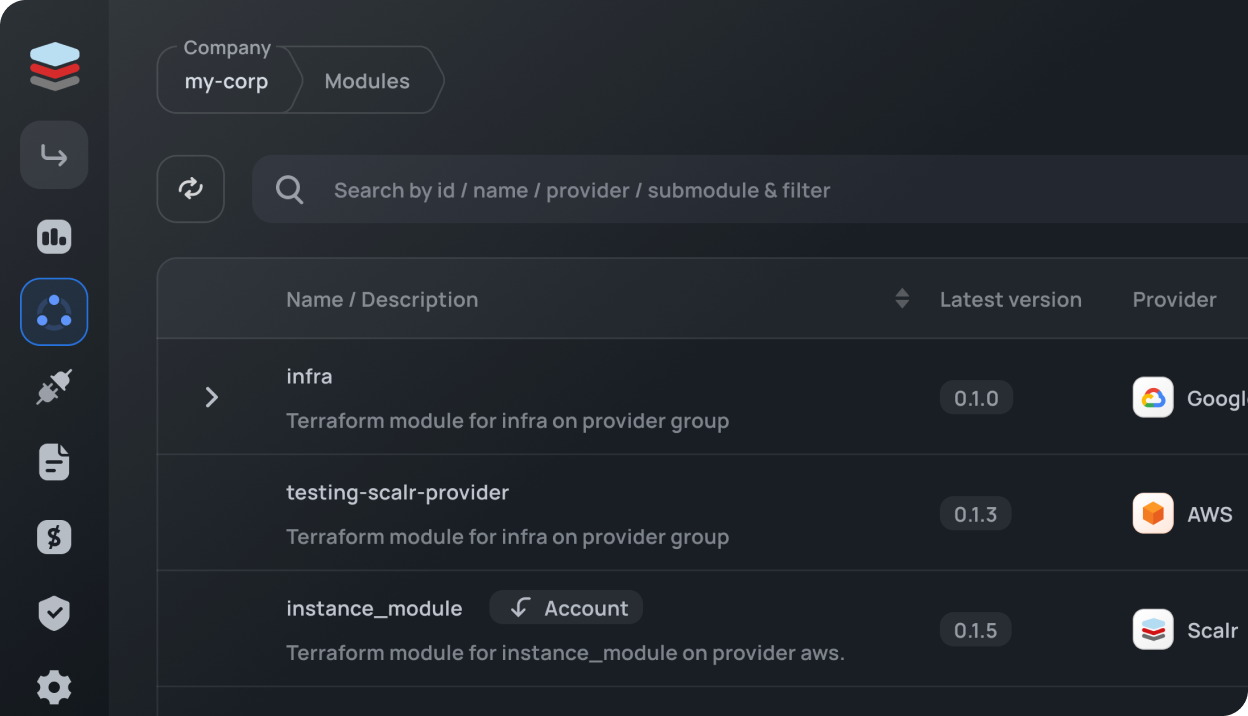

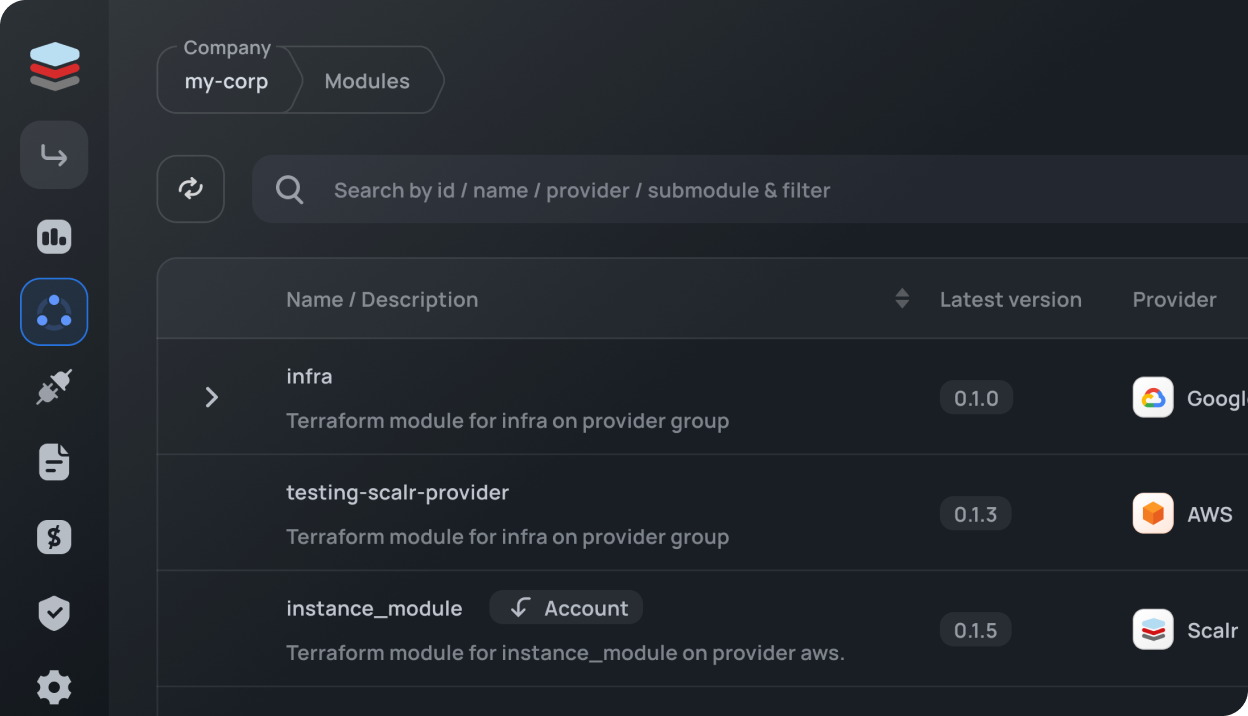

The interface for the module is nice and simple, and is satisfied by passing through everything that we declared as input variables. Using modules gives us a fantastic level of control over what the consumers of our code are doing. In this instance the module is defined locally; however, nothing prevents us from storing it in git or in a module registry like Terraform Cloud.

By creating a module we can actually give more control/self-service to our consumers: everything they need in order to create an app-server is defined here, and they know that it satisfies any organisational requirements, such as security controls.
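For example, if the module lived in a git repository, a production instance could pin it to a tagged release. This is only a sketch: the repository URL and the v1.0.0 tag are hypothetical, and the inputs mirror prd.tfvars from earlier.

```hcl
module "prd_app_server" {
  # Hypothetical repository URL and tag. "//app-server" selects a
  # sub-directory of the repository and "ref" pins a specific git tag.
  source = "git::https://example.com/your-org/terraform-modules.git//app-server?ref=v1.0.0"

  location           = "australiaeast"
  environment        = "prd"
  project_identifier = "blt"

  network = {
    address_space = "10.10.0.0/23"
    subnets = [
      {
        role          = "iaas"
        address_space = "10.10.1.0/24"
      }
    ]
  }
}
```

Modules pulled from a registry are pinned with the separate version argument instead of a ref in the source string. Either way, development can move to a newer release of the module while production stays on the one it was tested with.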

Configuration Files

The final way to pass configuration into our Terraform code is via configuration files. These are JSON or YAML files that we ingest using the jsondecode or yamldecode functions, which gives us dot access to the attributes.

First off, let's look at the example YAML file that we will use:

config.yaml

project:
  blt:
    environments:
      dev:
        location: australiaeast
        network:
          address_space: 10.0.0.0/23
          subnets:
            - role: iaas
              address_space: 10.0.1.0/24
      prd:
        location: australiaeast
        network:
          address_space: 10.1.0.0/23
          subnets:
            - role: iaas
              address_space: 10.1.1.0/24

A lot of the keys here will look pretty familiar, given they represent all of the input variables that we were previously passing into our Terraform code. The advantage of the configuration file approach is that we can house multiple projects in a single file, with all their environments alongside. We could even turn our configuration file into a module itself, or even a provider!

Let's look into our code now to see what's changed and how exactly we are consuming this configuration file.

To start we have our slimmed-down variables.tf. It now only holds what I call orientation variables, as they allow our Terraform code to orientate itself within the configuration file.

variables.tf

variable "environment" {
  type        = string
  description = <<-EOT
    (Required) The environment short name for the resources.
  EOT

  validation {
    condition = contains(
      ["dev", "uat", "prd"],
      var.environment
    )
    error_message = "Err: invalid environment provided."
  }
}

variable "project_identifier" {
  type        = string
  description = <<-EOT
    (Required) The identifier for the project, 4 character maximum
  EOT

  validation {
    condition     = length(var.project_identifier) <= 4
    error_message = "Err: project identifier cannot be longer than 4 characters."
  }
}

Next we have our main.tf, which holds the key to consuming our configuration file. We set up our environment through two local values, raw_config and config.

- raw_config: we read the config file itself into Terraform and use the yamldecode function to convert the YAML into a value that Terraform can easily work with.

- config: using our orientation variables and passing those into some lookup functions, we scope local.config to a single project and environment, for example the project blt in the dev environment.

main.tf

locals {
  raw_config = yamldecode(file("./config.yaml"))

  config = lookup(
    lookup(
      local.raw_config.project,
      var.project_identifier,
      null
    ).environments,
    var.environment,
    null
  )

  location_map = {
    "australiaeast"      = "aue"
    "australiasoutheast" = "aus"
  }

  name_suffix = lower(format(
    "%s-%s-%s",
    local.location_map[local.config.location],
    var.environment,
    var.project_identifier
  ))
}

...

The last file is our network.tf; again I have truncated the unchanged or boring portions.

network.tf

resource "azurerm_virtual_network" "this" {
  name                = format("vn-%s", local.name_suffix)
  location            = azurerm_resource_group.this.location
  resource_group_name = azurerm_resource_group.this.name
  address_space       = [local.config.network.address_space]
}

resource "azurerm_subnet" "this" {
  for_each = {
    for v in local.config.network.subnets :
    v.role => v.address_space
  }

  name                 = format("sn-%s-%s", local.name_suffix, each.key)
  resource_group_name  = azurerm_resource_group.this.name
  virtual_network_name = azurerm_virtual_network.this.name
  address_prefixes     = [each.value]
}

...

The part to notice above is how we now consume the subnets list from local.config. The snippet below compares the for expression driven by our config with the earlier one driven by variables:

// Config
resource "azurerm_subnet" "this" {
  for_each = {
    for v in local.config.network.subnets :
    v.role => v.address_space
  }

  ...
}

// Variables
resource "azurerm_subnet" "this" {
  for_each = {
    for v in var.network.subnets :
    v.role => v.address_space
  }

  ...
}

As you can see they look almost identical, so why bother with configuration files, I hear you ask? I have found configuration files extremely useful, especially in larger and more complex projects, as you can co-locate a lot of configuration information in a single place and then consume it in different places. For instance, if all the networking components were pulled out to be handled by some central networking component but we still wanted information about that infrastructure, we could read it from our configuration file. Perhaps we consume that configuration directly, or it gives us enough information to construct data sources; either way it removes the dependency on the other workspace/codebase. Another example: perhaps you have a cost center tag that is needed on all resources within the blt project, and those resources are scattered over any number of instances of Terraform code. With our configuration file it becomes insanely easy to update that tag everywhere from a single place!
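The cost center case could be sketched roughly as follows. The tags key and its contents are hypothetical additions to config.yaml, and the locals referenced (raw_config, config, name_suffix) are the ones defined in main.tf above.

```hcl
# Assumes config.yaml gains a hypothetical "tags" key under the project:
#
#   project:
#     blt:
#       tags:
#         cost_center: "1234"
#
# local.raw_config, local.config and local.name_suffix come from main.tf.
locals {
  # Fall back to an empty map when the project defines no tags.
  project_tags = try(local.raw_config.project[var.project_identifier].tags, {})
}

resource "azurerm_resource_group" "this" {
  name     = format("rg-%s", local.name_suffix)
  location = local.config.location
  tags     = local.project_tags
}
```

Every codebase that reads the same file picks up a changed cost center on its next run, without any of the Terraform code being edited.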

There are certainly some things to consider however when using this approach:

- Blast radius: something good can also be something bad. If someone mistakenly changes a line of configuration, it affects all code consuming that configuration.

- Validation: as we are now consuming YAML or JSON, we need some level of validation throughout the codebase so that an invalid (e.g. null) value doesn't break the code! I think this is actually a benefit in the long run; it just takes some upfront development cost.

- Structure: you must also consider the structure of your configuration and enforce it in some way for all your consumers, or you could potentially break things. I see this being solved through easy-to-consume documentation and/or the use of JSON/YAML schema files.
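As one possible take on the validation point, the two locals from main.tf could be rewritten with try, and an output precondition can report a missing project or environment in plain language. This is a sketch rather than the only way to do it; the resolved_location output name is made up, and other expressions that read local.config may still raise their own errors when the entry is missing.

```hcl
# Replaces the raw_config and config locals from main.tf.
# Uses var.project_identifier and var.environment from variables.tf.
locals {
  raw_config = yamldecode(file("./config.yaml"))

  # try() yields null instead of failing when the project or the
  # environment key is missing from the file.
  config = try(
    local.raw_config.project[var.project_identifier].environments[var.environment],
    null
  )
}

# Output preconditions require Terraform 1.2 or later.
output "resolved_location" {
  value = try(local.config.location, null)

  precondition {
    condition     = local.config != null
    error_message = "No entry found in config.yaml for the given project and environment."
  }
}
```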

Closing Out

Today we have gone through three ways of ingesting configuration into our Terraform code. The most rudimentary is the tfvars file, which lets us pre-fill the required input variables and pass different sets of those inputs into the same Terraform code. Slightly more advanced is the higher-order module, where we create a module out of our Terraform code and provide an interface for our consumers to fulfil. Finally there is the configuration file, such as a YAML file; this method allows for the most flexibility and can also be used in conjunction with the higher-order module!

All in all, I wouldn't say there is a specific "you must use Method A every time because of reason X". Each one offers different benefits, and you should select the one best suited to your situation!

Hopefully that's provided some valuable insight into getting your configuration into your Terraform code and reducing the amount of duplicated code! As always if you have feedback or would like to know more please feel free to reach out!

You can follow Brendan @BrendanLiamT on Twitter.